Hebo

JANUARY - MAY 2018

Post-operative assistant for Mohs surgery patients.

See our final report or see the code repository.

- For our HCI undergraduate capstone, we worked with UPMC Dermatology (Pittsburgh, PA) as our client to develop a post-operative assistant (chatbot) -- Hebo.

- Conducted user research (contextual inquiries, expert interviews, diary study) to better understand the needs of the stakeholders and the pain points of the users.

- Designed and developed low-, mid-, and high-fidelity prototypes. Continually sought feedback from our client, advisers, and users (patients) to iterate on our prototypes.

- Worked as the technical lead: researched appropriate technologies, developed the architecture of our post-operative assistant, and developed a hi-fi prototype for Android.

OVERVIEW

Our team of 5 worked with UPMC Dermatology (Falk) to address the problem of patients struggling to follow post-operative care instructions. Since skin cancer patients (Mohs surgery specifically) are typically outpatients--patients who do not stay overnight in a hospital--they need to understand how to best take care of themselves after their surgery. Our client also raised a broader question: how to scale a solution beyond UPMC so that hospitals around the nation can adopt it. With this in mind, our semester-long project focused on conducting user research to understand the context better, creating prototypes to demonstrate our solution(s) to users and our client, and developing a hi-fi product and continuance plan for our client to build on.

A typical post-operative care sheet will look something like this. Patients sometimes find the instructions intimidating and difficult to follow, so they call nurses after hours to ask follow-up questions. Furthermore, if instructions aren't followed properly, serious health consequences could arise.

PROCESS

User Research

Because of our tight timeline, the core of our research phase lasted a little over 2 weeks. In those weeks, we conducted user research in the following ways:

- Literature review to understand the current state of post-operative care and patient adoption of technology

- Contextual inquiries with Mohs surgery nurses, technicians, and doctors at UPMC Falk and UPMC St. Margaret

- User interviews with patients during downtime of surgeries

- Diary study for patients to document their thoughts and questions during the post-operative stage

Our research findings could be boiled down to the following insights:

- Doctors think that they are providing patients with all of the information they need, but this is not always the case.

- Patients look for greater reassurance and personalized care from the post-operative process.

- Patients can become self-conscious and concerned about their appearance after undergoing procedures.

- Nurses feel that some post-operative calls are redundant.

- The predominant demographic of skin cancer patients, the elderly population, calls for an emphasis on accessibility and simplicity.

To read more about these insights and our process, see our research report. With these insights, we proceeded to develop lo-fi prototypes of a solution to the post-operative care issue.

Prototyping

For the rest of the project, we created various prototypes to address the problem and tested them with both patients and hospital staff. We produced low-, mid-, and high-fidelity prototypes:

Brainstorming & low-fi -- created storyboards to illustrate three main prototype ideas: simulation training during operation downtime, customized instructions based on patient history, and a mobile application that uses machine learning to recommend post-operative instructions. After reviewing these prototypes with our client and advisers, we narrowed down to two main ideas to prototype:

- Chatbot -- tested the prototype by running a chatbot script that supported various scenarios, with a team member playing the role of the agent.

- Simulation -- tested the prototype by creating a sample educational module and conducting a think-aloud.

Full low-fi report available upon request. See here for personas, storyboards, and prototypes from this stage.

Mid-fi prototype -- combined feedback from the low-fi prototype sessions to merge elements of the chatbot and the simulation into a single application. This application is essentially a chatbot that serves educational content when appropriate. We named the chatbot Hebo, short for health bot. We also researched and designed ways to scale the solution during this stage. We then built a basic Android application to test this mid-fi prototype. The majority of the prototype was hard-coded for rapid prototyping purposes. We then sought feedback from someone who has had several Mohs surgery procedures in the past.

Key insights:

- Hebo's knowledge base should extend beyond the post-operative sheet -- we found that a chatbot that only repeated the contents of the post-operative sheet gave patients little reason to use it.

- Answers need to be more targeted and personalized to improve accuracy -- users found the generic, impersonal responses unhelpful since the answers were only tangential to their questions. Inaccuracies were also common since the questions patients asked were not directly addressed by our response bank.

- Personalizing care instructions for the user creates engagement -- patients feel more in tune with the agent if the responses are directed more towards them as an individual.

- Focus on usability improvements to minimize awkwardness and confusion -- the majority of users are from the elderly population, so adopting a new technology, such as a chatbot on a mobile application, is still a barrier to overcome.

- Discrepancies can cause distrust of the agent -- when information conflicts with what the user already knows, the user begins to distrust the chatbot, and that distrust is difficult to overcome.

See here for mid-fi report.

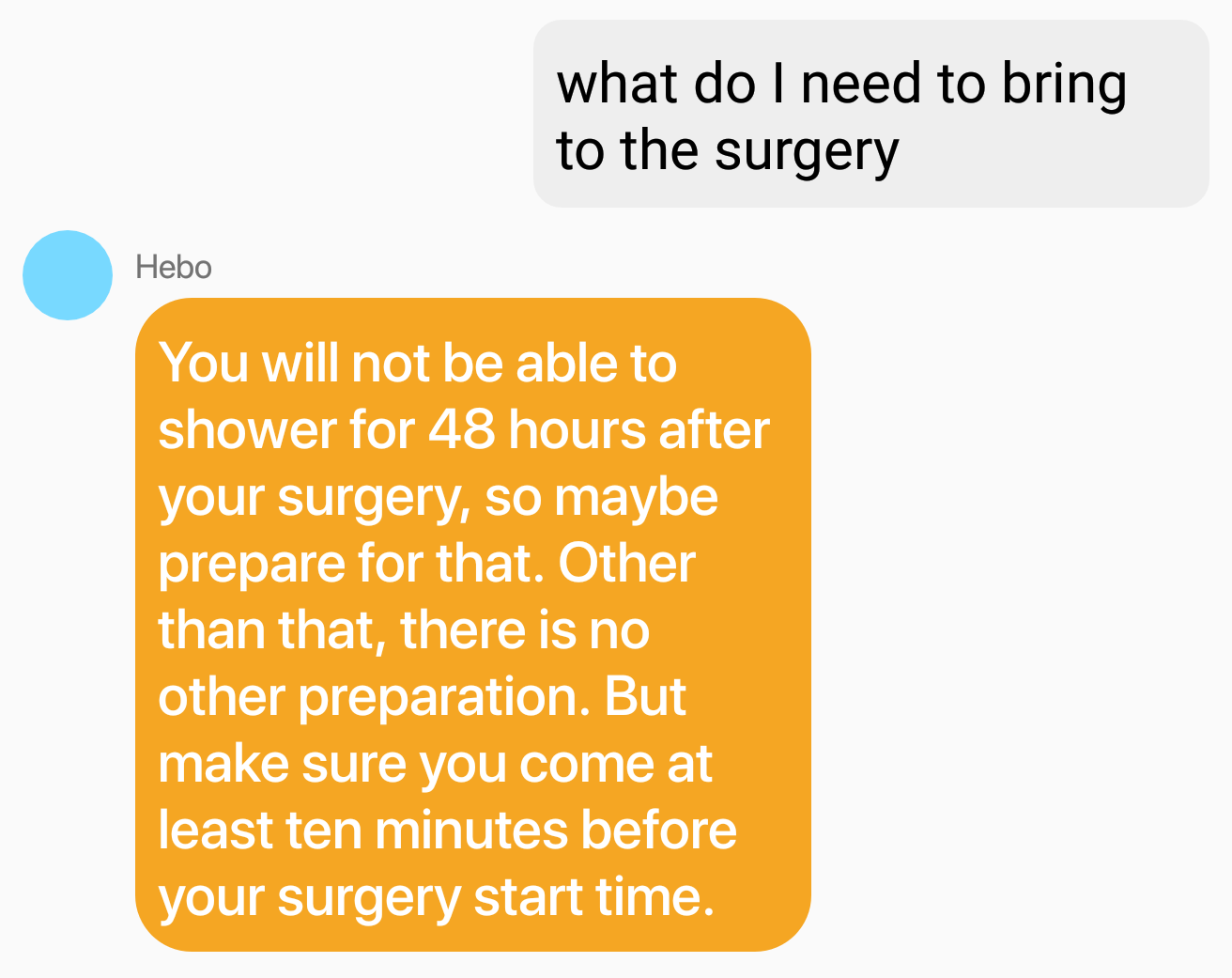

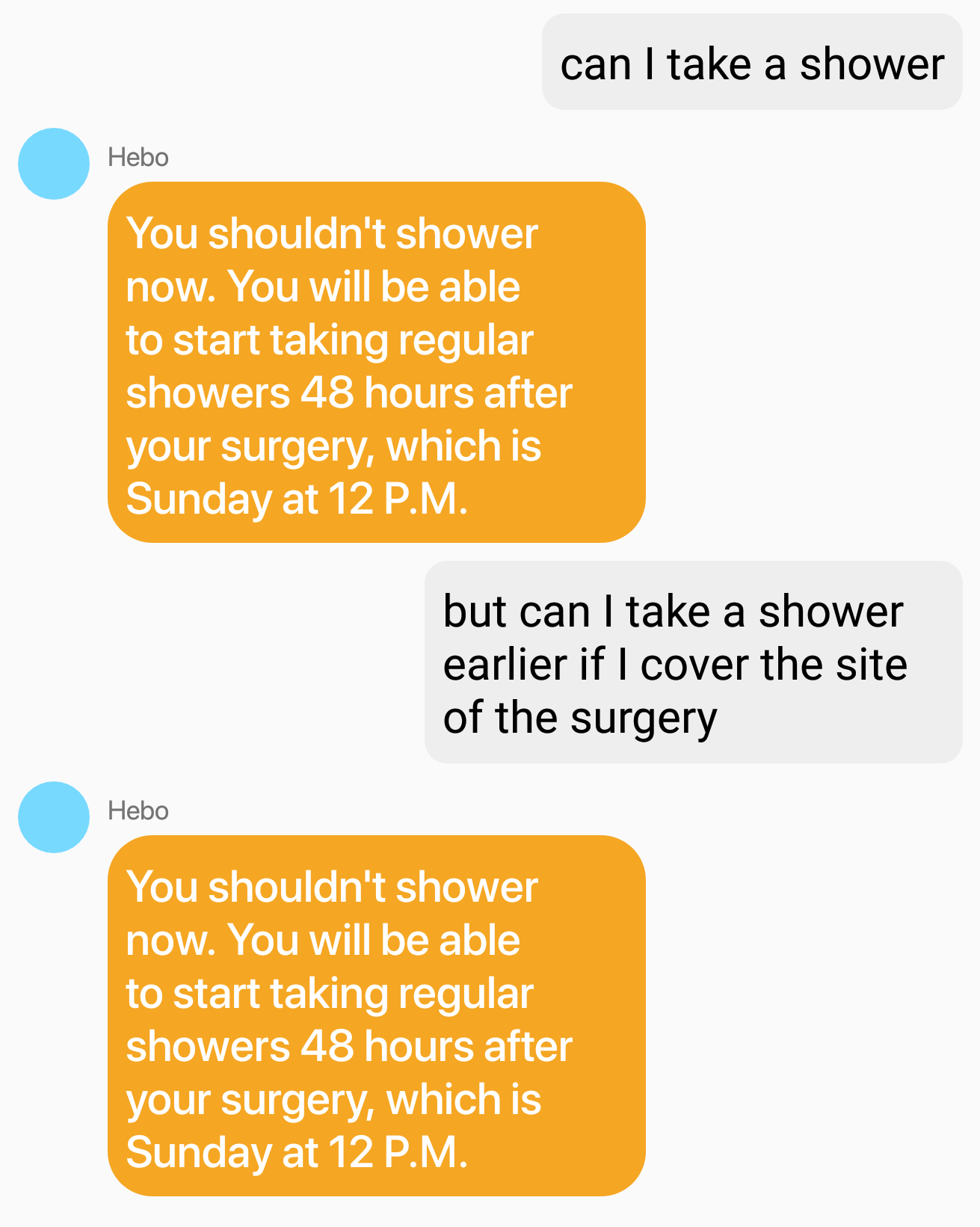

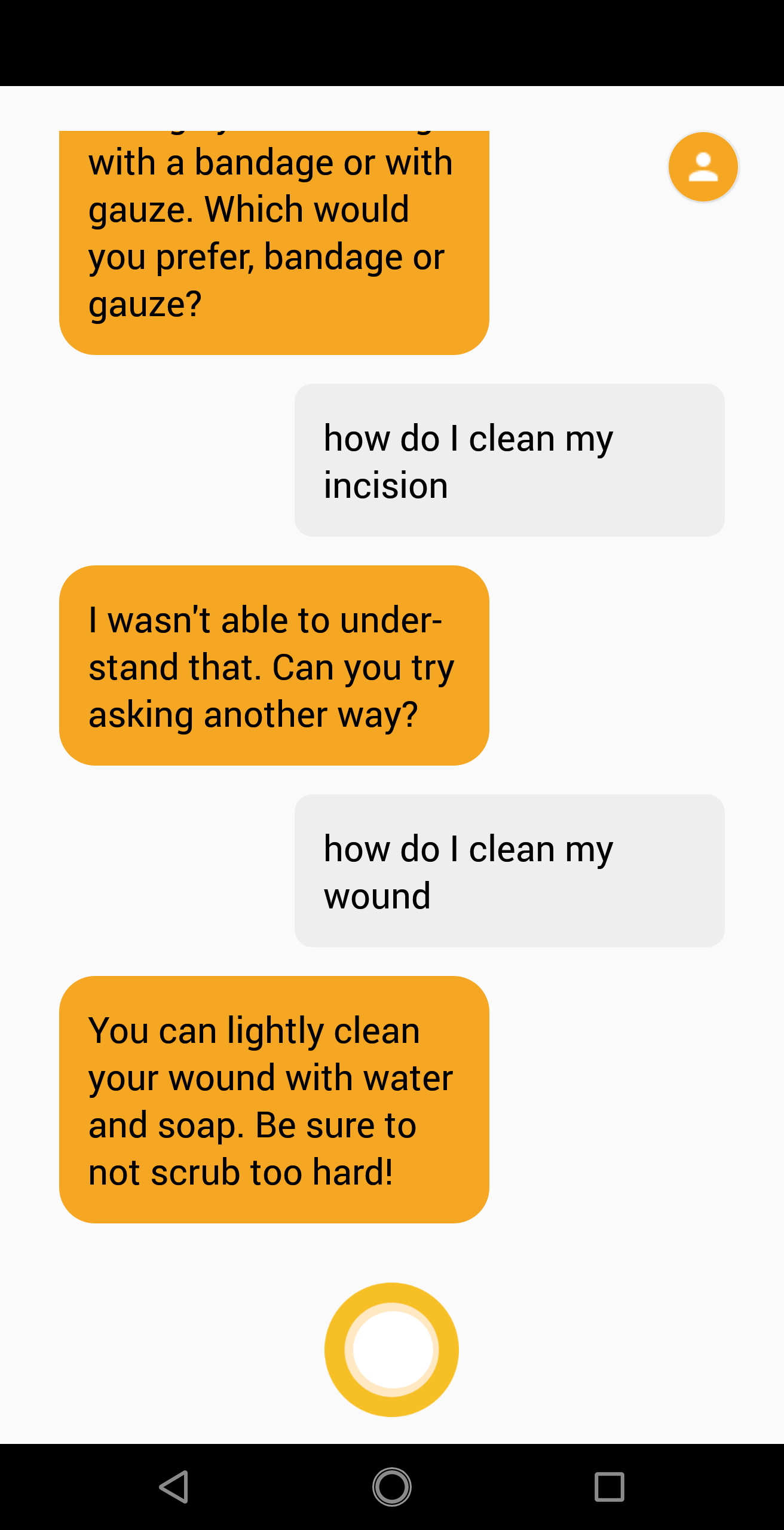

Misunderstanding inquiries

Hebo often misunderstood patient inquiries, which led to confusing responses.

Limited responses

We found that having a limited set of responses strongly worked against the interaction between patients and Hebo. This limit creates distrust and frustration for users. We addressed this by expanding the conversation tree that Hebo could understand and respond with.

Hi-fi prototype -- based on feedback from our mid-fi prototype, we knew we were on the right path, but that the solution needed to be developed fully for the benefits to become evident. During this last phase of developing our final prototype, we focused on creating a flexible (not hard-coded) prototype that served as an example for future development of the chatbot. We then put much of our effort into documentation to allow our client to expand on this project in the future. We addressed issues from the mid-fi prototype by:

- Developing the chatbot's knowledge base (questions it understands and responses it can give) so that Hebo understood more.

- Creating more follow-up dialogues that narrowed the user's question, so that Hebo can provide more accurate responses.

- Introducing personalized responses to the user based on area of surgery, time of surgery, etc.

- Providing visual responses to supplement post-operative instructions.

- Using mobile features (e.g. timer) to create a more interactive agent.

- Adding error handling by following up on questions that are unclear to the agent. If the agent still does not understand after a while, Hebo recommends that the user contact the nurse or doctor as a last resort. Errors are also caught based on previous conversations that Hebo has had with the user (e.g. Hebo knows not to give the same response twice).
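The error-handling behavior in the last bullet can be sketched roughly as follows. This is a minimal illustration in Python, not the code from our Android prototype: the `Conversation` class, the `choose_reply` function, the retry threshold, and the message wording are all assumptions made for the example.

```python
from dataclasses import dataclass, field

# Hypothetical threshold; the write-up only says "after a while".
MAX_REPHRASE_ATTEMPTS = 2

# Hypothetical wording for the two fallback messages.
REPHRASE_PROMPT = "Sorry, I didn't catch that. Could you rephrase your question?"
ESCALATION_MESSAGE = "I'm not able to help with this. Please contact your nurse or doctor."


@dataclass
class Conversation:
    """Tracks what has happened so far in one chat session."""
    failed_attempts: int = 0
    given_responses: set = field(default_factory=set)


def choose_reply(conversation, candidate_responses):
    """Pick a reply, falling back to a rephrase prompt and then to escalation.

    candidate_responses is the list of answers matched for the user's
    question; an empty list means the question was not understood.
    """
    # Never repeat an answer that was already given in this conversation.
    fresh = [r for r in candidate_responses if r not in conversation.given_responses]

    if fresh:
        conversation.failed_attempts = 0
        conversation.given_responses.add(fresh[0])
        return fresh[0]

    # Nothing new to say: ask the user to rephrase, then escalate to a person.
    conversation.failed_attempts += 1
    if conversation.failed_attempts > MAX_REPHRASE_ATTEMPTS:
        return ESCALATION_MESSAGE
    return REPHRASE_PROMPT
```

Counting failed attempts per conversation keeps the escalation to a nurse or doctor as a last resort, while a successful answer resets the counter.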

Visual responses

Visual responses allow for users to better understand the mental model of doctors and nurses.

Error handling

Hebo handles errors better by asking users to rephrase their questions.

Software Design and Development

Researching the appropriate technologies for a solution was a nontrivial task. With only a semester for research, design, and development, our solution would likely be incomplete by the end of the 5 months. We knew from the beginning that our solution needed to scale after the capstone ended, and that our client should be able to continue our efforts after we graduated. As we narrowed in on the chatbot concept, I started to look at various technologies that offered a user-friendly interface for creating a chatbot.

Google's Dialogflow was a strong fit for this. It offered accessibility for those who may have never touched code (in our case, our client) along with adaptability for those who have more experience with code and can develop a custom chatbot from it. Dialogflow provides a dashboard for creating user intents (think of these as questions that users could potentially ask a chatbot) and responses (think of these as the replies the chatbot should give once a given intent is identified). The major benefit of this tool is that the natural language processing of everyday conversations is handled by Google's AI technologies.
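To make the intent and response idea concrete, here is a small Python sketch of the data model. It is not Dialogflow's API and not our actual agent configuration: the `Intent` class, the intent names, the training phrases, and the placeholder responses are all hypothetical, and the word-overlap matcher is a deliberately naive stand-in for the natural language processing that Dialogflow performs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    """One kind of question the agent can recognize, with its canned replies."""
    name: str
    training_phrases: tuple
    responses: tuple


# Hypothetical intents; names, phrases, and responses are placeholders.
INTENTS = (
    Intent(
        name="bleeding.question",
        training_phrases=("my wound is bleeding", "there is blood on the bandage"),
        responses=("<placeholder: bleeding guidance from the care sheet>",),
    ),
    Intent(
        name="swelling.question",
        training_phrases=("the area is swollen", "is swelling normal"),
        responses=("<placeholder: swelling guidance from the care sheet>",),
    ),
)


def match_intent(utterance, intents=INTENTS):
    """Return the intent whose training phrases share the most words with the
    utterance, or None when no phrase shares any word."""
    words = set(utterance.lower().split())
    best, best_score = None, 0
    for intent in intents:
        score = max(len(words & set(p.split())) for p in intent.training_phrases)
        if score > best_score:
            best, best_score = intent, score
    return best
```

In the real tool, the training phrases and responses are entered through the dashboard, which is what makes the agent maintainable by someone who does not write code.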

We also needed to choose a platform to serve our agent on. Mobile devices made the most sense to us since mobile adoption is increasing as the years go by, even among older generations. As middle-aged people who are already familiar with cellphones and tablets grow older, they will face fewer adoption issues than we see today. From our literature reviews, we know that elderly populations often face issues with hearing, vision, motion, and cognition, so these factors were considered when we designed our application. For example, users talk to Hebo by voice (rather than typing a question) since this feels most natural to the elderly, we provide both audio and visual feedback in case the user has difficulty hearing or seeing, and we de-clutter Hebo's screen down to just one button and the chat history to reduce cognitive load.

We also knew that our client used a Samsung Galaxy phone, which made him an everyday Android user. Because the application will likely be prototyped and tested after we leave, we needed to make sure our client could install and use Hebo on his device without us being there. Because of this, we developed an Android application, optimizing for his device.

To support more advanced interactions between the user and Hebo, we hosted a webhook that processed more complex logic for the responses. This webhook allowed Dialogflow and our mobile application to interact in more intelligent ways: for example, the mobile app identifies that the user has had surgery on the neck, and Dialogflow serves a specific response to keep the head elevated when lying down. We also used the webhook to communicate a visual response back to the mobile application so that Hebo knew which image to serve to the user. The interaction flowed as follows. The user speaks to Hebo through the mobile application, which sends the user's request to Dialogflow. Dialogflow matches the request to a specific intent based on the programmed conversation tree. For that intent, Dialogflow either gives a direct response or contacts the webhook, which sends the appropriate response back through Dialogflow to the mobile app. The mobile application then either returns the response directly (if it is text) or starts the appropriate feature for that response (e.g. a timer to check back on the patient).
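The neck-surgery example can be sketched as a webhook handler in Python. This is an illustration under assumptions, not our deployed webhook: it assumes the Dialogflow ES (v2) webhook JSON layout (`queryResult.intent.displayName`, `originalDetectIntentRequest.payload`, `fulfillmentText`, `payload`), and the intent name, the `surgery_area` key, the `image` key, and the image file name are all hypothetical.

```python
# Hypothetical intent name; the real agent's intent names are not listed here.
POSITION_INTENT = "sleeping.position"

# Area-specific guidance. Only the neck example comes from the write-up.
AREA_SPECIFIC_TEXT = {
    "neck": "Keep your head elevated when lying down.",
}
DEFAULT_TEXT = "<placeholder: general guidance from the care sheet>"


def handle_webhook(request_body):
    """Build a webhook response (a dict) from a webhook request body (a dict)."""
    query_result = request_body.get("queryResult", {})
    intent_name = query_result.get("intent", {}).get("displayName", "")

    # Assumption: the mobile app forwards patient context in the request payload.
    patient = request_body.get("originalDetectIntentRequest", {}).get("payload", {})
    area = patient.get("surgery_area", "")

    if intent_name != POSITION_INTENT:
        return {"fulfillmentText": DEFAULT_TEXT}

    response = {"fulfillmentText": AREA_SPECIFIC_TEXT.get(area, DEFAULT_TEXT)}
    if area in AREA_SPECIFIC_TEXT:
        # Custom payload telling the app which image to show (hypothetical key and file).
        response["payload"] = {"image": f"{area}_elevation.png"}
    return response
```

Keeping the handler a pure function of the request body makes it easy to test without a server; any web framework can wrap it to accept the POST request from Dialogflow.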

For the final prototype, we developed the conversation for bleeding, swelling, and wound care. However, there is still much more to develop for our client and his team. The technical documentation is included in our final report, which details how to proceed with each system.

System architecture: three main systems worked to communicate with each other. The interaction flow informally: User speaks to Hebo (mobile) > Hebo sends request to Dialogflow > Dialogflow chooses appropriate intent > Dialogflow contacts webhook with intent parameters if appropriate > Webhook returns appropriate response to Dialogflow > Dialogflow returns response to Hebo > Hebo chooses what to do with given response and serves to user.
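The last step of this flow, where Hebo chooses what to do with a response, might look roughly like the sketch below. It is written in Python for brevity rather than in the Android app's own language, and the `dispatch` function, the action names, and the `timer_minutes` and `image` payload keys are assumptions made for illustration.

```python
def dispatch(response):
    """Decide what the app should do with one agent response.

    Returns an (action, detail) tuple:
      ("start_timer", minutes) when the payload requests a check-back timer,
      ("show_image", name)     when the payload names an image to display,
      ("speak_text", text)     otherwise, for a plain text reply.
    """
    payload = response.get("payload") or {}
    if "timer_minutes" in payload:
        return ("start_timer", payload["timer_minutes"])
    if "image" in payload:
        return ("show_image", payload["image"])
    return ("speak_text", response.get("fulfillmentText", ""))
```

For example, `dispatch({"fulfillmentText": "hi"})` selects the plain text path, while a response carrying a `timer_minutes` payload selects the timer feature.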