PeerPresents

SEPT 2015 – MAY 2016

An online peer feedback system that improves the feedback process for in-class presentations. This is an ongoing research project at Carnegie Mellon’s Human-Computer Interaction Institute that aims to find the most effective feedback tool for students to use in classroom settings.

See the web application here, or the published CHI paper here.

- Co-authored a research paper published at the CHI conference (2017)

- Helped collect over 6,000 feedback comments for analysis

- Improved the UI/UX of the web application

- Designed and implemented an A/B test deployed in classroom trials

- Worked in HTML, CSS, JS (Node.js, Express.js, Jade (now Pug), Socket.IO), and MongoDB

- Digitized user data, assisted in data coding, and helped analyze a classroom case study

WEB DEVELOPER

PeerPresents

Improving UI/UX:

Our team’s designer created a new logo, color scheme, and button design and placement. During my first few weeks as a web developer on the team, I focused heavily on frontend work to make PeerPresents more presentable to our users. Because we needed to deploy the system to classrooms, we prioritized improving the UI.

• Provided feedback on the UI redesign during weekly Scrum sessions and team meetings

• Implemented the frontend of the new interface and design

• Made the application more mobile- and tablet-friendly

• Fixed layout issues throughout the system

Before

After

Implementing A/B test:

Toward the end of the semester, our team wanted to test new features to further our study of how to optimize the tool. Brainstorming took a few weeks, but in the end we decided to test how the tool’s interface affects user behavior. In particular, we wanted to know whether a split interface or a combined interface led people to comment or vote more. In our system, users can post their own comments, and they can also view feedback from their peers and vote on it. We implemented an A/B test in which version A used a split interface and version B used a combined view. We hypothesized that the split display would lead to more commenting and less voting, since having to switch between tabs might discourage voting. We also hypothesized that the combined display would lead to fewer, more conservative comments but more votes on other users’ comments.

Our team spent the first few weeks designing the overall test and the interface of the new system. After that, it was a matter of implementing the two versions in our existing codebase. We randomly assigned students to either group A or group B and tracked their account names in our database so that we could switch the groups later. For the first two weeks, students in group A saw the split view of the interface, while the remaining students, in group B, saw the combined view. After two weeks, we switched each student to the opposite group.
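The assignment-and-switch scheme described above is a simple crossover design. The following is a minimal TypeScript sketch of it, assuming three things: each student is identified by account name, the switch happens 14 days after the test starts, and assignments are kept in memory rather than in the database the real system used. The names `assignGroups`, `viewFor`, and `SWITCH_AFTER_DAYS` are hypothetical and are not taken from the PeerPresents codebase. The sketch also shuffles the roster and alternates assignments so the two groups differ in size by at most one. That balancing step is an assumption, not something the original describes.

```typescript
// Hypothetical sketch of a two-period crossover A/B assignment.
// Names and the 14-day switch point are illustrative assumptions.

type Group = "A" | "B";
type View = "split" | "combined";

const SWITCH_AFTER_DAYS = 14; // assumed: groups swap conditions after two weeks

// Fisher–Yates shuffle; `random` is injectable so tests can be deterministic.
function shuffle<T>(items: readonly T[], random: () => number = Math.random): T[] {
  const out = items.slice();
  for (let i = out.length - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1)); // 0 <= j <= i
    [out[i], out[j]] = [out[j], out[i]];
  }
  return out;
}

// Randomly split account names into two groups whose sizes differ by at most one.
function assignGroups(
  accounts: readonly string[],
  random: () => number = Math.random
): Record<string, Group> {
  const groups: Record<string, Group> = {};
  shuffle(accounts, random).forEach((name, i) => {
    groups[name] = i % 2 === 0 ? "A" : "B";
  });
  return groups;
}

// Group A starts on the split view and group B on the combined view;
// once SWITCH_AFTER_DAYS have passed, each group sees the other version.
function viewFor(group: Group, daysSinceStart: number): View {
  const inFirstPeriod = daysSinceStart < SWITCH_AFTER_DAYS;
  const startsOnSplit = group === "A";
  return inFirstPeriod === startsOnSplit ? "split" : "combined";
}

// Usage: assign a small roster and show which view each student gets
// in week 1 (day 3) and in week 3 (day 20).
const roster = assignGroups(["alice", "bob", "carol", "dave"]);
for (const name of Object.keys(roster)) {
  const group = roster[name];
  console.log(name, group, viewFor(group, 3), viewFor(group, 20));
}
```

Because the view is computed from the stored group and the elapsed time, switching every student to the opposite condition needs no second database write. The trade-off is that the switch date is fixed in code.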

• Brainstormed designs for the A/B test (infrastructure, interface, experience, etc.)

• Participated in team discussions involving the research aspects of the test

• Implemented the backend and frontend of the new system

• Deployed the test to a classroom over the course of two weeks (fixed bugs along the way)

Version A, questions tab

Version A, vote tab

Version B, questions & vote

Quality Assurance:

Our web application needed to be fully functional for our classroom deployments. We planned to deploy to a particular class and compare PeerPresents with handwritten notecards as a way of giving feedback. Preparing for this deployment meant tracking down and fixing any bugs in our system.

• Fixed UX bugs (e.g., giving users feedback on their actions, adjusting the platform for mobile)

• Participated in bug crawls/bashes with the team to try to “break” our system

• Tested the stability of PeerPresents under heavy traffic

• Fixed issues that caused our server to crash

RESEARCH ASSISTANT

In spring 2016, my role in PeerPresents involved less development and more analysis of our datasets. After deploying to two classrooms in the fall semester, our team had gathered over 6,000 real-time feedback comments from students in actual classrooms.

• Coded datasets in order to validate the consensus of our results

• Discussed the implications of our data in weekly team meetings

• Digitized large sets of feedback comments to make our results easier to analyze

• Organized datasets in Microsoft Excel to visualize our findings

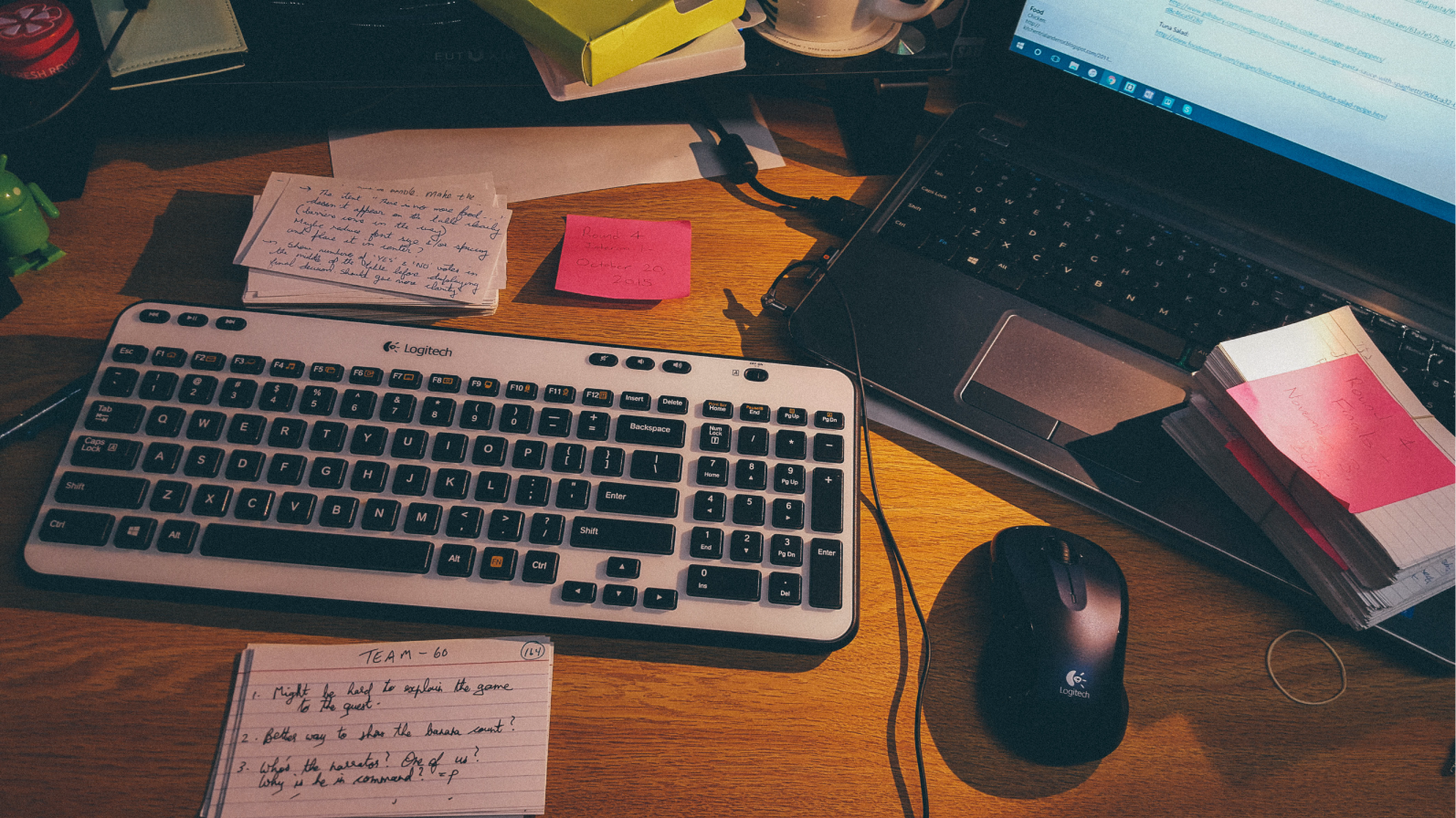

Going through physical feedback cards to understand a non-digital way of giving peer feedback